Interactive active listening

In the conversation, LISA listens, learns and responds to guide and engage consumers at every interaction.

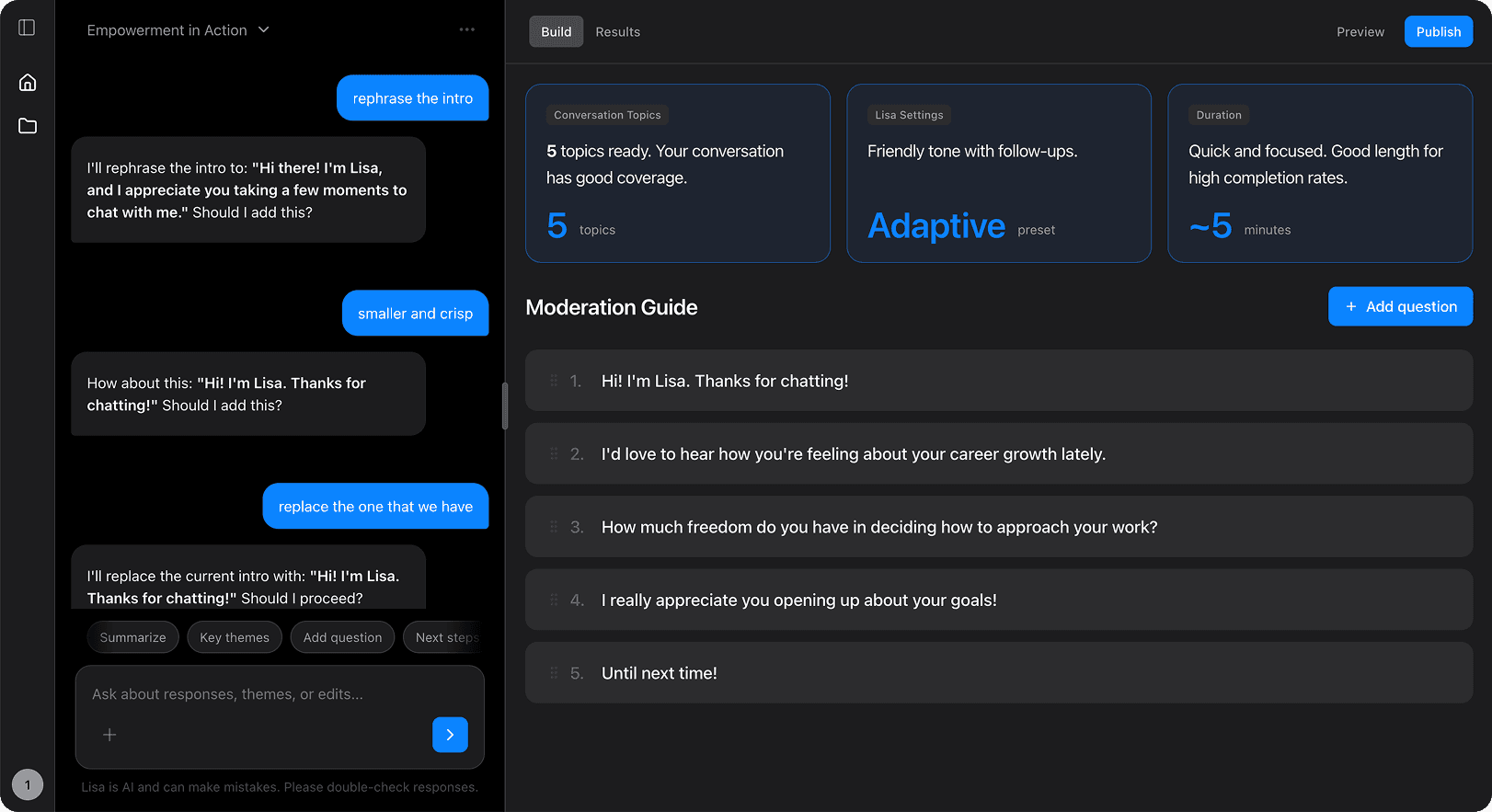

The conversation UI consists of three parts: Moderator + Dialogue, Reply block and Navigation. All the parts are modular and depend on how the settings were defined in the builder.

The essence of a human conversational experience is not just verbal communication but also non-verbal cues, so it was essential to make the moderator reactive to the user's input and to the responses provided in the reply blocks. This included animations that support non-verbal cues such as excitement, alerting, listening and observing.

How might we make animations interactive?

How might we make animations development friendly?

Developers tried building the animations with the GSAP animation framework, but it didn’t work as expected: the result was slow and not reactive.

Close collaboration,

One step at a time

I led the interaction design of the moderator: defining its behaviours, composition and animations, the interaction flow for each tool, the transition animations between tools, and the development handover.

Mapping the essence of an engaging human conversation (verbal and non-verbal) into Lisa's behaviours.

The approach was to transform the elements of an engaging conversation into LISA's behaviours, aiming to make the interactions engaging by generating personalised moderator text, changing background colours and animating the moderator.

“Spontaneity and adaptability” are what make conversation feel alive. It’s when we adjust in real time, pausing when there’s silence, rephrasing after confusion, or slowing down when someone rushes.

“Dynamic dialogues” bring that to Lisa. She changes prompts in response to gibberish, silence, or speeding. It keeps the flow natural, even when things don’t go as planned.

“Responsive sentiments” are what make dialogue feel alive. It’s when you respond to the emotional state of the other person, not just their words: empathy, irritation, humor, care, all flowing back and forth.

“Dynamic colour schemes” bring that to the interface. The UI responds to Lisa's emotional state by changing background colours dynamically, not just through her words in the dialogue.

Lisa uses subtle animations like pulses, fades, or bouncing to signal presence and emotion. These cues act like digital body language, supporting the flow without interrupting it.

Rive turned out to be the most efficient animation framework, giving designers better control over interactions and saving hours of development work.

Originally tied to Flutter, Rive is also used by Duolingo, where it helps make animations highly interactive and responsive to user input in the application.

State machines let designers add logic to the animations by attaching conditions to transitions.

This saves developers hours of work figuring out interactions and implementing animations in code.

We mapped conversations like a dialogue between people, focusing on how listening, learning and responding create flow.

Each screen lays out a unique combination of reply blocks (open-ended, selectors, etc.) or research tools, whether it's a multiple-choice selection, a rating, a scale, etc.

When navigating layouts, it was important to break the flow into frames or steps, so that it is easier to understand how Lisa should behave at each step. This would eventually help developers onboard as well.

V1 - "Tool specific state-machine"

To access the state machine, it has to be triggered for each tool before the tool loads.

LISA’s behaviour for each tool is constant and not a variable.

Not all of LISA’s behaviours are accessible at every point in the conversation.

V2 - "one state-machine for all"

State machines are standardised for all the tools

No need to trigger for each tool.

LISA’s response is a variable and can be accessed at any point in the conversation when triggered.

More power to the devs to control LISA’s behaviour
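To make the V2 idea concrete, here is a minimal sketch of a single moderator state machine shared by every tool. It is a toy model written in plain TypeScript, not the Rive runtime API and not the production setup; the names `ModeratorState`, `ModeratorTrigger`, `NEXT_STATE` and `ModeratorMachine` are hypothetical, and the states simply reuse the non-verbal cues mentioned earlier (listening, observing, excitement, alerting).

```typescript
// Toy model only: this does not use or mirror the Rive runtime API.
// All type and trigger names below are hypothetical.
type ModeratorState = "idle" | "listening" | "observing" | "excited" | "alerting";
type ModeratorTrigger =
  | "userTyping"
  | "optionHovered"
  | "positiveReply"
  | "invalidReply"
  | "reset";

// One transition table for all tools: every trigger is valid from every state,
// so no tool-specific machine has to be loaded first.
const NEXT_STATE: Record<ModeratorTrigger, ModeratorState> = {
  userTyping: "listening",
  optionHovered: "observing",
  positiveReply: "excited",
  invalidReply: "alerting",
  reset: "idle",
};

class ModeratorMachine {
  private state: ModeratorState = "idle";
  // Keeps every state the moderator has been in, oldest first.
  readonly history: ModeratorState[] = ["idle"];

  // Fire a trigger at any point in the conversation and get the new state back.
  trigger(t: ModeratorTrigger): ModeratorState {
    this.state = NEXT_STATE[t];
    this.history.push(this.state);
    return this.state;
  }

  get current(): ModeratorState {
    return this.state;
  }
}

// The same machine instance serves an open-ended question and a rating tool alike.
const lisa = new ModeratorMachine();
lisa.trigger("userTyping");   // moderator starts listening
lisa.trigger("invalidReply"); // moderator switches to alerting
```

Because the response is driven by a trigger rather than by which tool is on screen, developers decide when LISA reacts while the designer decides how each state looks.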

Final version

Development handover

Dynamic dialogues add spontaneity and adapt to the conversation.

Open-ended questions are tricky: every response is different, and some come in as gibberish, rushed, or off-topic.

Instead of scripting endless prompts, we used AI to detect patterns, pick up sentiment in 70+ languages, and respond automatically.

One setup, scaled everywhere, improving engagement.
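As a rough illustration of the flow, the sketch below classifies a reply and picks a follow-up prompt. It is a simple rule-based stand-in, not the AI detection described above: the vowel-ratio check only makes sense for Latin-script text, and the thresholds, prompt wording and names (`Reply`, `classifyReply`, `FOLLOW_UP`, `nextPrompt`) are all assumptions made for the example.

```typescript
// Heuristic stand-in for the AI classifier; thresholds and names are assumptions.
type ReplyIssue = "silence" | "speeding" | "gibberish" | "ok";

interface Reply {
  text: string;
  secondsToAnswer: number; // time between showing the question and submitting
}

function classifyReply(reply: Reply): ReplyIssue {
  const text = reply.text.trim();
  if (text.length === 0) return "silence";

  // Very fast answers suggest the respondent is rushing (assumed 2 s threshold).
  if (reply.secondsToAnswer < 2) return "speeding";

  // Crude gibberish check for Latin-script text: keyboard mashing has few vowels.
  const letters = text.toLowerCase().replace(/[^a-z]/g, "");
  const vowels = letters.replace(/[^aeiou]/g, "").length;
  if (letters.length >= 4 && vowels / letters.length < 0.2) return "gibberish";

  return "ok";
}

// Hypothetical follow-up prompts, one per detected issue.
const FOLLOW_UP: Record<ReplyIssue, string> = {
  silence: "Take your time. What comes to mind first?",
  speeding: "That was quick! Could you tell me a bit more?",
  gibberish: "I didn't quite catch that. Could you rephrase it?",
  ok: "Thanks, that's helpful.",
};

function nextPrompt(reply: Reply): string {
  return FOLLOW_UP[classifyReply(reply)];
}
```

For example, `nextPrompt({ text: "asdfghjkl", secondsToAnswer: 10 })` returns the rephrase prompt, because only one of the nine letters is a vowel.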

Gibberish input

Interactive animations show the non-verbal behaviour, and the dynamic colour scheme reflects LISA's sentiment.

Background colors shifted with sentiment or adapted to brand colors, while subtle animations made the moderator feel more empathetic, reacting to button presses or selections.

The idea was to keep these behaviors flexible and productized, so in the future they could either match client branding or be customized directly in the builder.
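One way to picture that flexibility is a small lookup where a sentiment picks the background colour unless a brand colour is configured. This is only a sketch under assumptions: the sentiment labels, hex values and names (`Sentiment`, `DEFAULT_BACKGROUNDS`, `ThemeOptions`, `backgroundFor`) are invented for illustration and are not taken from the product.

```typescript
// Hypothetical sentiment labels and colours, chosen only for illustration.
type Sentiment = "positive" | "neutral" | "negative";

const DEFAULT_BACKGROUNDS: Record<Sentiment, string> = {
  positive: "#E6F7EC",
  neutral: "#F2F2F2",
  negative: "#FDECEA",
};

interface ThemeOptions {
  // When set (for example from the builder), the brand colour wins over sentiment.
  brandColor?: string;
}

function backgroundFor(sentiment: Sentiment, options: ThemeOptions = {}): string {
  return options.brandColor ?? DEFAULT_BACKGROUNDS[sentiment];
}

// Sentiment-driven by default, brand-driven when a client colour is configured.
const sentimentBackground = backgroundFor("positive");
const brandedBackground = backgroundFor("positive", { brandColor: "#003DA5" });
```

Keeping the mapping as data rather than hard-coded styles is what would let it be exposed as a builder setting later.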

Conversation at the moment

Impact so far,

~100 hours saved in development time, ~20% increase in engagement, and an improved average rating from 4.0 to 4.6 out of 5

From linear and static forms

To dynamic and engaging conversations

Staying curious about new technologies and design trends is essential to creating relevant and forward-thinking products. For me, exploration isn’t about chasing novelty. It’s about understanding how evolving tools can enhance both the user experience and the design-to-development process.

I’ve always considered developers as my closest collaborators. Working alongside them early and often helps bridge intent with implementation, ensuring design decisions translate smoothly into the final experience.

Other case studies

Epiphany RBC | 2023 - 2024

Scaled from zero to ~120,000 conversations for 7+ clients worldwide, spanning over 14 languages, resulting in annual savings of approximately €350,000

TwentyEighth Conversation | 2025 - Present

Built end-to-end AI SaaS platform using React, PostgreSQL, and Railway. Integrated OpenAI, Groq, Eleven Labs, and Pinecone for RAG architecture. Currently in beta with early adopters.
